I just got back from the Workshop on Model Theoretic Representations in Phonology at Stony Brook University, organised by Jeff Heinz and Scott Nelson. I thoroughly enjoyed the workshop and learnt a lot from the talks. It was also awesome to meet so many old friends after such a long hiatus — it was my first workshop/conference after COVID-19 descended upon the world.

I was planning to go anyway when I saw the call, so it was easy to say yes when I was invited to be a panelist. Also, I figured there was no better way to show my ignorance than to be on a panel at a workshop full of specialists 😄.

The panelists were given four questions to think about. I will discuss my thoughts on some of these below. The first question was the following:

- What has formal language theory and/or model theoretic phonology taught us about phonology?

Here, I was originally attracted to these lines of research because of the discussion of the computational (in)tractability of phonological theories, especially Optimality Theory (Idsardi 2006; Heinz, Kobele, and Riggle 2009). However, I haven’t seen much more in this line of research lately.[^1] A second line of research, one that I am particularly excited about, is the use of model theory to compare different theories and see whether they are (contrary to what one might have impressionistically expected) actually notationally equivalent (Graf 2010; Strother-Garcia 2019; Jardine, Danis, and Iacoponi 2021, amongst others). There were a couple of talks at the workshop related to this line of inquiry. Scott Nelson presented a comparison of coupling graphs in Articulatory Phonology and segmental representations, and concluded that they are quite similar (Nelson 2022).[^2] Nick Danis presented a comparison of two different feature systems and showed that, despite bi-directional quantifier-free (QF) logical transduction being possible, the two feature systems could still have very different natural class behaviour (Danis 2022).[^3] I think this line of inquiry into notational equivalence is generally quite exciting for theorists, and I hope to see more of it!

Two further questions we were asked to think about prior to the workshop are related, so I will address them together below.

- Where have practitioners of formal language theory and/or model theoretic phonology overpromised?

- Where does formal language theory and/or model theoretic phonology fall short because of inherent limitations in the approach? In other words, what can’t it ever hope to explain?

First, it’s not clear that trying to identify the complexity class that a particular (set of) observed phonological pattern(s) belongs to gets us to where we want to be. I often see phrases like “the complexity of phonology is known to be X”, which is quite a ways away from “the complexity of observed phonological patterns is known (perhaps some weaker word than “known” is required here) to be X”. The former is an ampliative inference from the latter, and in fact quite a sizeable jump from it.

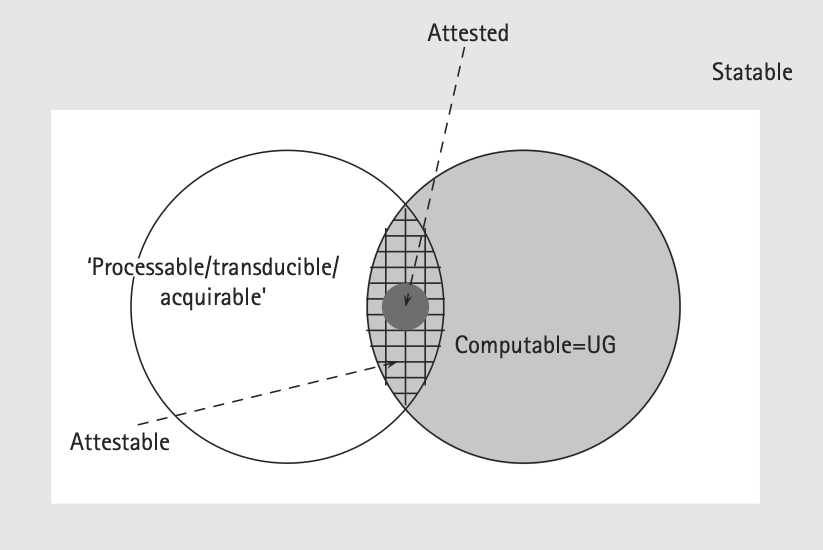

Let me clarify. Trying to find the upper bound on the complexity of the set of observed phonological patterns itself doesn’t tell us much about the upper bound on the complexity of phonological computations, for the trivial reason that the observed patterns are an intersection of multiple factors, of which the phonological grammar is just one. As Reiss (2007) pointed out, attested languages/patterns are a subset of the attestable languages/patterns which themselves are an intersection of the phonologically computable patterns and many other performance factors. If anything, observed upper bounds on observed phonological patterns are likely to be lower bounds for the computational system of phonology, given that the observed patterns are a result of the intersection of multiple factors beyond the computational system itself.4

Figure 1: An image from Reiss (2007)
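The subset reasoning behind Reiss's picture can be spelled out in a couple of lines. This is only a sketch under assumptions that are not in the original figure: the set names are labels chosen here, $c$ is a hypothetical complexity measure defined on individual patterns, and "upper bound" is read as a supremum over a set of patterns.

$$
\text{Attested} \;\subseteq\; \text{Attestable} \;=\; \text{Computable} \,\cap\, \text{PerformanceCompatible} \;\subseteq\; \text{Computable}
$$

$$
\sup_{p \,\in\, \text{Attested}} c(p) \;\le\; \sup_{p \,\in\, \text{Computable}} c(p)
$$

Read this way, whatever bound is measured on the attested patterns can only sit at or below the bound for the computational system, which is why it behaves like a lower bound for the latter rather than an upper bound.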

Furthermore, it can’t simply be that typological rarity informs us about the computational system. Take, for example, center-embedded sentences: they are difficult to process and therefore likely not present (i.e., rare) in observed data, but surely this absence should not be modelled as stemming from the grammar.

The underdetermination problem is serious, and we need to pay a lot more attention to it. So, the main question is: how do we get at the grammar beyond an ampliative inference based on typological data?

One strategy that I am deeply sympathetic to, proposed by Jon Rawski and Lucie Baumont in their talk, is to impose more (potentially a priori) rationalist restrictions on the space of potential grammars/patterns. We can call this a constraint from above. We need to think about constraints (computational or otherwise) that are sensible starting points for whittling down the space of possible grammars.

A second way to solve this problem is to be more creative in probing the cognitive object (phonological computation) in many more ways than just typological data; typological data simply doesn’t get to the relevant depth for us to understand the cognitive object. We need multiple independent ways to assess whether a claim is right (i.e., triangulation). Otherwise, the enterprise runs the risk of becoming a curve-fitting exercise. Another way to think about this problem is in terms of the machine learning concepts of training sets and test sets. When we use observed patterns to come up with an upper bound on computational complexity, all we are doing in some sense is fitting a model to a training set, but we really need independent test sets to see if what we say has some meat, or if we are just curve-fitting.

One type of test set some have tried comes from artificial language experiments. However, these are typically run on adults, and it is not obvious that we learn much about the restrictions on the acquisition device or the computational system by looking at adults in an artificial language setting, which in the best case becomes a case of L2 learning, and in the worst case a case of puzzle solving. I say this as someone who has done a fair few of them myself. In fact, there is at least some evidence suggesting that looking at adults is not the same as looking at children in artificial language experiments (Schuler, Yang, and Newport 2016). Even if we put this worry aside, as many who run such experiments (including me!!) are wont to do, it is still unclear what the linking hypotheses are. In some places, the hypothesis is that anything beyond the proposed upper-bound complexity class can’t be learnt in artificial language experiments (Lai 2015; McMullin 2016, amongst others), and in other places it is that something outside the space will be more difficult to learn (Avcu and Hestvik 2020).[^5] These are quite different hypotheses, and both of them are at best ceteris paribus predictions; however, in reality, the ceteris is never paribus. It is not so simple to compare two such patterns, as we need to assume that all other (performance) factors treat the two patterns the same. For example, take the case of first-last sibilant harmony vs. regular sibilant harmony in the above papers. Is it really the case that other performance factors (learning algorithm, working memory, …) treat the two patterns the same? It is only if they do that we can claim that the latter is easier to learn than the former purely due to the computational system. Either way, it is important to flesh out the linking hypothesis much more clearly, so that we can interpret the results.
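To make the two patterns in the example concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the toy symbols `s` and `S` stand in for two sibilants that differ in one feature, the function names are hypothetical, and the definitions are simplified versions of the patterns rather than the exact stimuli or grammars used in the cited experiments.

```python
# Toy alphabet (an assumption for illustration): "s" and "S" stand in for
# two sibilants that differ in a single feature; other letters are neutral.
SIBILANTS = {"s", "S"}


def obeys_regular_harmony(word: str) -> bool:
    """True if all sibilants anywhere in the word are identical."""
    sibilants_in_word = {seg for seg in word if seg in SIBILANTS}
    return len(sibilants_in_word) <= 1


def obeys_first_last_harmony(word: str) -> bool:
    """True unless the first and last segments are sibilants that disagree.

    Word-medial sibilants are ignored under this simplified definition.
    """
    if not word:
        return True
    first, last = word[0], word[-1]
    if first in SIBILANTS and last in SIBILANTS:
        return first == last
    return True


if __name__ == "__main__":
    for w in ["sakas", "sakaS", "saSas", "Sasak"]:
        print(w, obeys_regular_harmony(w), obeys_first_last_harmony(w))
```

A form like `saSas` is where the two toy patterns come apart: it satisfies the first-last check but not the regular one. Note that nothing in the sketch says anything about learning algorithms or working memory, which is exactly the gap a linking hypothesis would have to fill.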

A second issue worth thinking about is that I sometimes get the feeling that practitioners take prior (somewhat atheoretical) descriptions to be sacrosanct, and then proceed to assess their computational complexity. However, as I see it, part of our scientific responsibility is to actually figure out what the facts themselves are, and I would like to see more input from the community towards that end.[^6]

Relatedly, we need cases where the relevant theories (computational or otherwise) force us to reconceptualise the data[^7], and then crucially show evidence in favour of that reconceptualisation. That is something we should really be after. Many times, what seems a relevant fact from one perspective or theory is not so from another. If we are able to cut the descriptive pie differently from what was assumed before, and show evidence for the new way of cutting it, that would go some way towards convincing us that the theory is interesting/productive. However, for this to happen, again, we can’t be beholden to existing descriptions of the observed phonological patterns, as it is entirely possible that such descriptions are mischaracterisations of the actual facts.

The above thoughts make it sound like I am not particularly sanguine about the work at the workshop; however, nothing could be further from the truth. While I am an outside observer, I am also deeply sympathetic to the cause. It is more that I would like to push the community further along the above lines. Furthermore, I think Jeff and Scott were awesome to include questions critical of the endeavour; we need more such researchers willing to be self-critical. Finally, the observations are largely generic, and exactly the same problems plague anyone interested in establishing competence claims, so I don’t want to say the above are issues faced uniquely by those interested in formal language theory and model theoretic phonology.

[^1]: It is entirely possible that this is just my ignorance.

[^2]: This, however, depends on how one treats the issue of the continuous/gradient information in parameter values related to spatio-temporal targets (such as stiffness) that are allowed in Articulatory Phonology and are not explicitly represented in coupling graphs.

[^3]: On the specific issue of assimilation, Kyle Gorman asked the very pertinent question of why a phonologist should necessarily worry about the assimilation patterns possible in different feature systems, as the worry presupposes that the relevant description of assimilation is appropriate. Charles Reiss, Kyle and I talked about this later, and discussed how practically any theory of phonology has to have an independent way to insert features/segments; therefore, the non-identity of potential assimilation patterns between two feature theories might not mean much unless we can show independently that an assimilation, as opposed to a feature change, has indeed taken place.

[^4]: Unless I am mistaken, Jon Rawski made a similar point in his co-authored talk with Lucie Baumont titled “Phonology and the Linguistic Swampland”.

[^5]: I myself think the latter is likely to be more reasonable, given that I don’t think general pattern learning abilities can just be switched off in a linguistic task.

[^6]: A particularly nice example of this is from Heinz and Idsardi (2010), who found that the standard description of the Samala (also known as Chumash) sibilant harmony pattern is actually different (in crucial ways) from the original description.

[^7]: This was attempted by Andrew Lamont in his talk on “The phonotactics of nasal cluster dissimilation”, who used a theory-first approach to say that the patterns looked a lot like they belonged to the TSL class, so it would be worthwhile to view them as such. That said, I don’t think the recharacterisation of the data was quite right, but I am deeply sympathetic to the attempt.